Program

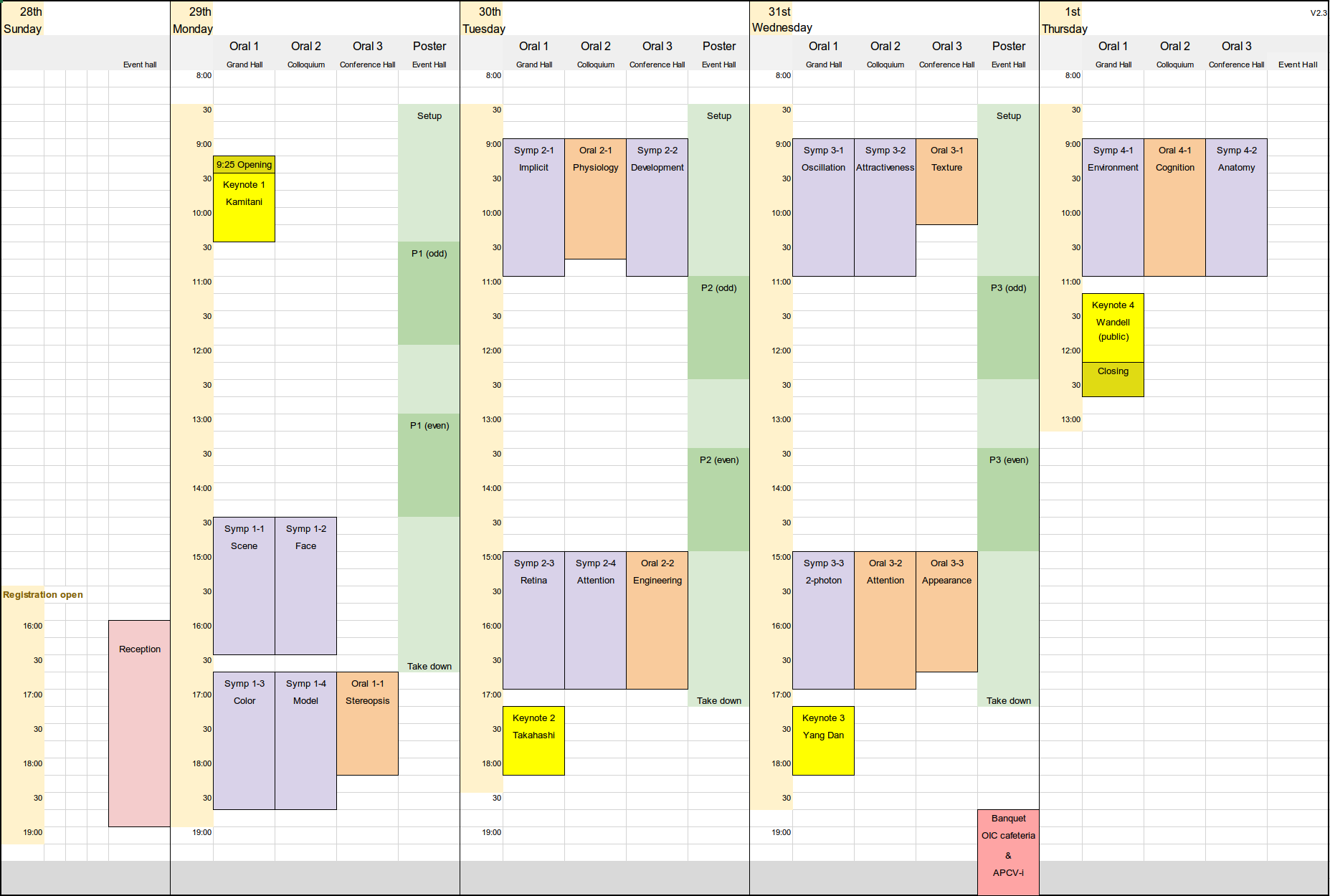

Schedule

Posters will be displayed throughout the day. Morning sessions are for odd-numbered posters, and afternoon sessions are for even-numbered posters.

Floorplan is here: Floorplan

Keynotes

Keynote 1

Monday, 9:30 AM - 10:30 AM

Reconstructing visual and subjective experience from the brain

Yukiyasu Kamitani (Kyoto University and ATR Computational Neuroscience Laboratories)

Yukiyasu Kamitani, Ph.D. Professor at Kyoto University and Department Head and ATR Fellow at ATR Computational Neuroscience Laboratories. He received a B.A. in Cognitive Science from the University of Tokyo and a Ph.D. in Computation and Neural Systems from the California Institute of Technology. He continued his research in cognitive and computational neuroscience at Harvard Medical School and Princeton University. In 2004 he joined ATR Computational Neuroscience Laboratories, and since 2015 he has been a Professor at Kyoto University. He is a pioneer in the field of "brain decoding", which combines neuroimaging and machine learning to translate brain signals into mental contents. He was named Research Leader in Neural Imaging on the 2005 “Scientific American 50”, and has received many awards, including the Tsukahara Memorial Award (2013), the JSPS Prize (2014), and the Osaka Science Prize (2015). In 2018, he was selected as an ATR Fellow. He has recently been engaged in contemporary art projects with Pierre Huyghe (“UUmwelt” at Serpentine Galleries, London, 2018) and other artists.

Moderator: Shin'ya Nishida (Kyoto University, NTT)

Keynote 2

Tuesday, 5:15 PM - 6:15 PM

Pluripotent stem cell derived photoreceptor transplantation

Masayo Takahashi (Center for Biosystems Dynamics Research, RIKEN)

We are now investigating how to increase the number of synapses and the efficacy of transplantation. However, immunohistochemical characterization of synapses in the degenerated retina or in the grafted retina is often challenging, as their traits are not as clear as in the wild-type retina. Therefore, using postnatal wild-type mouse retina as training data, we developed a new method to count synapses objectively. Using this method, we evaluated the synapses of iPSC-retina after transplantation to rd1 mice. The number of synapses had increased by 30 days after transplantation, whereas we could not find any synapse formation in in vitro retinal organoids. Synapse numbers were higher in a light/dark cycle environment than in a completely dark one.

I will talk about the future strategy of outer retinal transplantation.

Masayo Takahashi, M.D., Ph.D. Project leader at the Laboratory for Retinal Regeneration, RIKEN Center for Biosystems Dynamics Research. She received her Ph.D. from Kyoto University. After serving as an assistant professor at Kyoto University Hospital, she moved to the Salk Institute, U.S., where she discovered the potential of stem cells as a tool for retinal therapy. She has been with RIKEN since 2006.

Moderator: Ichiro Fujita (Osaka University)

Keynote 3

Wednesday, 5:15 PM - 6:15 PM

A motor theory of sleep-wake control

Yang Dan (University of California, Berkeley)

Yang Dan, Ph.D. She studied physics as an undergraduate student at Peking University and received her Ph.D. training in Biological Sciences at Columbia University. She did her postdoctoral research at Rockefeller University and Harvard Medical School. She is currently Paul Licht Distinguished Professor in the Department of Molecular and Cell Biology and an investigator of the Howard Hughes Medical Institute at the University of California, Berkeley.

Moderator: Izumi Ohzawa (Osaka University)

Keynote 4 / free public lecture

Thursday, 11:15 AM - 12:15 PM

ISETBIO: Software for the foundations of vision science

Brian Wandell (Stanford University)

Collaborative work with David Brainard, Nicolas Cottaris, Trisha Lian, Zhenyi Liu, Joyce Farrell, Haomiao Jiang, Fred Rieke, and James Golden.

Professor Brian A. Wandell, Ph.D. He is the first Isaac and Madeline Stein Family Professor. He joined the Stanford Psychology faculty in 1979 and is a member, by courtesy, of Electrical Engineering and Ophthalmology. He is Director of the Center for Cognitive and Neurobiological Imaging, and Deputy Director of the Wu Tsai Neuroscience Institute. He graduated from the University of Michigan in 1973 with a B.S. in mathematics and psychology. In 1977, he earned a Ph.D. in social science from the University of California at Irvine. After a year as a postdoctoral fellow at the University of Pennsylvania, he joined the faculty of Stanford University in 1979. Professor Wandell was promoted to associate professor with tenure in 1984 and became a full professor in 1988.

Moderator: Kaoru Amano (CiNet, NICT)

Symposia

Physiological, psychological, and computational foundations of scene understanding

Organizers:

Yukako Yamane (Osaka University, Okinawa Institute of Science and Technology)

Ko Sakai (University of Tsukuba)

Speakers:

Charles Ed Connor (Johns Hopkins University)

Mark Lescroart (University of Nevada, Reno)

Ernst Niebur (Johns Hopkins University)

Steven W. Zucker (Yale University)

Vision science has revealed the nature of human vision and the role of visual functions in cognition in various ways, although how humans understand an entire scene remains a challenging problem. How does the visual system segregate images into meaningful parts and then assemble those parts into informative representations of the outside world? How do those representations support our immediate, intuitive knowledge about where we are, what things are present, and how those things relate to each other and to the overall scene? How do they tell us what just happened in the scene, what might happen next, and how we should react? Recent rapid advances in machine learning algorithms have enabled the identification, description, and even generation of scenes; however, these algorithms are still incapable of providing clues for understanding a scene as humans do. We invite world-leading scientists to discuss the physiological, psychological, and computational foundations of scene understanding.

Objects, Scenes, and Gravity in Ventral Pathway Visual Cortex

Alexandriya Emonds, Siavash Vaziri, Charles E. Connor (Krieger Mind/Brain Institute, Johns Hopkins University)

It has long been thought that the ventral pathway is dedicated exclusively to visual object processing, while scene understanding is primarily a dorsal pathway function. However, we reported recently that many neurons in macaque monkey ventral pathway, including a majority in the TEd channel, are far more responsive to large-scale scene structure. These neurons are particularly tuned for tilt and slant of planar surfaces and edges, in ways that suggest they represent the direction of gravity. In new experiments, we have begun to examine how object information and scene information are integrated in the ventral pathway. Early results show that individual neurons can be congruently selective for ground tilt, object tilt, and object balance (distribution of mass with respect to points of ground contact). This is consistent with the theory that visual cortex serves as an intuitive physics engine for understanding the natural world, in particular the energetic potentials and constraints imposed on objects by the ubiquitous force of gravity.

Human Scene-selective Areas Represent the Orientation of and Distance to Large Surfaces

Mark Lescroart (University of Nevada, Reno)

A network of areas in the human brain, including the Parahippocampal Place Area (PPA), the Occipital Place Area (OPA), and the Retrosplenial Complex (RSC), responds to images of visual scenes. Long-standing theories suggest that these areas represent the 3D structure of the local visual environment. However, most experiments that study representation of scene structure have relied on operational or categorical definitions of scene structure, for example, comparing responses to open vs closed scenes. It is not clear, based on such studies, how these areas might respond to scenes that do not fall into one of the investigated categories. Furthermore, it has been hypothesized that scene-selective areas represent 2D cues for 3D structure rather than 3D structure per se. To evaluate the degree to which each of these hypotheses explains variance in scene-selective areas, we develop an encoding model of 3D scene structure and test it against a model of low-level 2D features. Our 3D structural model uses continuous parameters based on 3D data (surface normals and depth maps) rather than human-assigned categorical labels such as “open” or “closed”. We fit the models to fMRI data recorded while subjects viewed visual scenes. Variance partitioning on the fit models reveals that scene-selective areas represent the distance to and orientation of large surfaces, at least partly independent of low-level features. Individual voxels appear to be tuned for combinations of the orientation of and distance to large surfaces. Principal components analysis of the model weights reveals that the most important dimensions of 3D structure are distance and openness. Finally, reconstructions of the stimuli based on our model demonstrate that the model captures unprecedented detail about the 3D structure of the local visual environment from BOLD responses in scene-selective areas.

Perceptual Organization and Attention to Objects

Ernst Niebur (Solomon Snyder Department of Neuroscience and Zanvyl Krieger Mind/Brain Institute, Johns Hopkins University)

One of the most important strategies for dealing with the extremely high complexity presented by the visual signal from a cluttered scene is to organize the input into perceptual objects. The task of scene understanding is then transformed from interpreting ~10^6 rapidly changing input signals to interpreting a much smaller number of spatio-temporal patterns that mostly correspond to structures in the real world and are constrained by physical laws. This task is, however, highly non-trivial: it requires grouping those elements of the raw input that correspond to the same object and segregating them from those corresponding to other objects and the background. We propose that primates solve this perceptual organization task using small populations of dedicated neurons that represent different objects. We note that this segregation process does not require the formation of fully defined, recognizable objects: computational models show that it can be accomplished on perceptual precursors of objects with very simple properties, which we call proto-objects. Key features of the models are "grouping" neurons that integrate local features into coherent proto-objects, and excitatory feedback to the same local feature neurons that caused the activation of the associated proto-object's grouping neuron. Organization of the scene into proto-objects thus transforms the seemingly impossible task of scene understanding into manageable sub-tasks. For instance, object recognition can then proceed in a sequential fashion, by operating on one proto-object at a time. A more general mid-level task is attention to objects, and the model explains how attention can be directed (top-down) to objects even though the central structures that control top-down attention do not have a representation of the detailed features of these objects.

How Interactions Between Shading and Color Inform Object and Scene Understanding

Steven W. Zucker (Department of Computer Science, Yale University)

What is the shape of an apple, and what color is it?

These are instances of classical questions about object perception: (i) how is it possible that we can infer the three-dimensional shape of an object (e.g., an apple) from its shading, and (ii) how is it possible that we can separate the intensity changes due to material effects (the apple's pigmentation) from intensity changes due to shading. Clearly mistakes in solving (ii) would impact (i). Importantly, these two problems arise in scene perception as well. Objects are described by surfaces and their parts; scenes are described by surface arrangements and their interactions. For example, when walking along a path through the woods, where is the ground surface and why do shadows not affect its perceived shape? Again, mistakes would impact performance if shadows were interpreted as holes.

We introduce a computational approach to these questions grounded in basic neurophysiology and computational theory. Regarding problem (i), we outline a novel approach to shape-from-shading inferences that is based on visual flows, a mathematical abstraction of how information is represented in visual cortex. Regarding (ii), we introduce a model of color representation (hue flows) also based on visual cortex, that is analogous to the shading flows. Then, given both flows, we can determine when hue co-varies generically with shading, thereby addressing problem (ii) and implying a material effect. We demonstrate psychophysically how hue flows can be designed to alter shape percepts, and we demonstrate computationally how hue flows pass through cast shadows. Taken together, key aspects of our abilities to wander through scenes and to describe and manipulate objects are both supported by the foundational interactions of shading and hue flows.

Symposium 1-2

Unpacking cognitive and neural mechanisms underpinning the recognition and representations of unfamiliar and familiar faces and facial expressions: behavioral, eye movement and ERP studies

Organizer:

Kazuyo Nakabayashi (University of Hull)

Speakers:

Sakura Torii (Kobe Shoin Women’s University)

Kazuyo Nakabayashi (University of Hull)

Alejandro Estudillo (University of Nottingham)

Christel Devue (Victoria University of Wellington)

Holger Wiese (Durham University)

This symposium addresses some of the key debates in studies of face recognition and expressions. Here, we provide novel evidence demonstrating perceptual, cognitive and neural mechanisms underlying the representations and recognition of familiar and unfamiliar faces, and facial expressions across a range of paradigms. One study concerns the role of facial features in detecting facial expressions through manipulation of spatial frequencies. Two studies report perceptual matching of unfamiliar faces, with one study concerning effects of culture (Japanese vs. British) on eye movements, inversion effects and Thatcherization. The other study sheds light on the relative contribution of featural and holistic processing to same- and different-identity matching. The two remaining studies investigate cognitive and neural representations of familiar faces, with one study manipulating the appearance of famous faces and their popularity in order to elucidate the representations of familiar faces in the cognitive system. The other study measures event-related brain potentials (N250) to reveal how semantic and visual information may interact, giving rise to the recognition of familiar faces. The symposium will provide a comprehensive view of how faces and expressions are processed and stored in memory.

Three dimensional configuration and spatial frequency properties of facial expression

Sakura Torii (Kobe Shoin Women’s University)

The features of happy, angry, sad and surprised faces were studied. For the happy face, three-dimensional analysis showed that distinct characteristic changes occurred around the cheeks, and gray-level analysis showed that the deviation range of gray level was wider than that measured in other faces. In a face recognition experiment using frequency-filtered face images, only the happy face was recognized more easily than other faces, even under the low-pass condition. These results suggest that emphasizing the contrast over a wide region of the facial surface increases the three-dimensional features, which possibly results in the selective enhancement of the “happy face features”. I foresee new make-up foundations that emphasize and develop only the “happy face feature”.

The role of inversion and Thatcherization in matching own- and other-race faces

Kazuyo Nakabayashi (University of Hull)

In the Thatcher illusion, a face in which the eyes and mouth are inverted looks grotesque when shown upright but not when inverted. Two experiments examined the contribution of feature-based and configural processing to matching normal and Thatcherized pairs of isolated face parts (i.e., the eyes and mouth), and how perceptual matching would be influenced by the race of the face and by inversion (better performance for upright than inverted faces). White British and native Japanese groups made same/different judgements to isolated face parts. Experiment 1 used same-identity pairs, encouraging feature-based processing, while Experiment 2 used different-identity pairs, inducing configural processing. Across experiments, effects of inversion and Thatcherization were found, but these effects varied depending on the race of the observer and the race of the stimulus face. In addition, eye movements demonstrated increased sampling of inverted compared with upright faces and of Thatcherized compared with normal faces. The findings demonstrate that perceptual biases, shaped by culture-specific strategies and task-based attentional demands, can determine sensitivity to feature-based and local configural information during perceptual encoding.

Matching faces: the facial features are important, but so does the whole face

Alejandro J. Estudillo, Nate Frida (University of Nottingham)

Matching two unfamiliar faces is of paramount importance in forensic scenarios, but the cognitive mechanisms behind this task are poorly understood. In fact, in contrast to the notion that faces are processed at a holistic level, it has been suggested that face matching is driven by featural processing. The present study aims to shed light on this issue by exploring the role of holistic and featural processing in match trials (i.e., both faces depict the same identity) and mismatch trials (i.e., the two faces depict different identities). Across two experiments, observers were asked to decide whether a pair of faces depicted the same or two different identities while their eyes were being tracked. In Experiment 1, a gaze-contingent paradigm was used to manipulate holistic/featural processing. In Experiment 2, in addition to a standard face matching task, observers also performed a part/whole task, which provides an index of holistic processing. Results showed that both holistic and featural processing are important for face matching and that neither of them alone suffices for face matching.

When Brad Pitt is more refined than George Clooney: The role of stability in developing parsimonious facial representations

Christel Devue (Victoria University of Wellington)

Most people can recognise large numbers of faces, but the facial information we rely on is unknown despite decades of experimentation. We developed a theory that assumes representations are parsimonious and that different information is more or less diagnostic in individual faces, regardless of familiarity. Diagnostic features are those that remain stable over encounters and so receive more representational weight. Importantly, coarse information is privileged over fine details. This creates cost-effective facial representations that may refine over time if appearance changes. The theory predicts that representations of people with a consistent appearance (e.g., George Clooney) should include stable coarse extra-facial features, and so their internal features need not be encoded with the same high resolution as those of equally famous people who change appearance frequently (e.g., Brad Pitt). In three preregistered experiments, participants performed a recognition task in which we controlled appearance of actors (variable, consistent) and their popularity (higher, lower). Consistent with our theory, in less popular actors, stable extra-facial features helped remember consistent faces compared to variable ones. However, in popular actors, representations of variable actors had become more refined than those of consistent actors. We will discuss broader implications of our theory for the field.

The sustained familiarity effect: a robust neural correlate of familiar face recognition

Holger Wiese¹, Simone C. Tüttenberg¹, Mike Burton², Andrew Young² (¹Durham University, ²University of York)

Humans are remarkably accurate at recognizing familiar faces independent of a particular picture. However, cognitive neuroscience has largely failed to show a robust neural correlate of image-independent familiar face recognition. Here, we examined event-related brain potentials elicited by highly personally familiar (close friends, relatives) and unfamiliar faces. We presented multiple “ambient” images per identity, varying naturally in lighting, viewing angles, expressions, etc. Familiar faces elicited more negative amplitudes in the N250 (200–400 ms), reflecting the activation of stored face representations. Importantly, an increased familiarity effect was observed in the subsequent 400–600 ms time range. This Sustained Familiarity Effect (SFE) was reliably detected in 84% of individual participants. Additional experiments revealed that the SFE is smaller for personally known but less familiar faces (e.g., university lecturers) and absent for celebrities. Moreover, while the N250 familiarity effect does not strongly depend on attentional resources, the SFE is reduced when attention is directed away from the faces. We interpret the SFE as reflecting the integration of visual with additional person-related (e.g., semantic, affective) information needed to guide potential interactions. We propose that this integrative process is at the core of identifying a highly familiar person.

Symposium 1-3

Recent studies on the mechanisms of color vision and its role in the society.

Organizers:

Ichiro Kuriki (Tohoku University)

Kowa Koida (Toyohashi University of Technology)

Speakers:

Bevil Conway (NIH)

Hisashi Tanigawa (Zhejiang University)

Chihiro Hiramatsu (Kyushu University)

This symposium introduces recent progress in studies of color representation in the primate (including human) visual cortex, especially *after* the cone-opponent stage, to vision researchers in physiology, psychophysics, art, and computational fields in Japan and other countries. Color vision is one of the fundamental features of primate vision. It is used not only in object search and social interactions that support an individual's survival, but it can also give a soul-stirring impression in fine arts. The contribution of color to our lives is tremendous. On the other hand, descriptions of color-vision mechanisms in most textbooks stop at the level of the cone-opponent system, although it has been pointed out since the early ‘90s that the outputs of the cone-opponent system do not directly correspond to pure red, blue, green, and yellow sensations (i.e., unique hues). Indeed, there has not been a clear-cut explanation of how color perception is processed in the brain. However, various possibilities have been proposed for the structure of color-vision and related visual-processing mechanisms in the cortex, which may be related to our color perception (i.e., subjective experience of color appearance). Three leading studies on this topic will be introduced in this symposium.

First, Dr. Conway will introduce recent studies on cortical structure and functionality for the processing of color and related high-level visual features in the primate cortex. Second, Dr. Tanigawa will introduce their recent study on the neural structure of color-vision processing mechanisms in the early visual cortex. Finally, Dr. Hiramatsu will introduce recent studies on the polymorphism of color vision in primates using genetic and behavioral approaches.

Bevil Conway (National Institutes of Health)

What is color for and how are color operations implemented in the brain? I will take up this question, drawing upon neurophysiological recordings in macaque monkeys, fMRI in humans and monkeys, psychophysics, and color-naming in a non-industrialized Amazonian culture. My talk will have three parts. First, I will discuss results showing that the neural implementation of color depends on a multi-stage network that gives rise to a uniform representation of color space within a mid-level stage in visual processing. Second, I will describe work suggesting that color is decoded by a series of stages within higher-order cortex, including inferior temporal cortex and prefrontal cortex (PFC). In a surprising twist, these discoveries reveal a general principle for the organization and operation of inferior temporal cortex and provide evidence for a stimulus-driven functional organization of PFC. Finally, I will describe two recent discoveries prompted by our neurobiological discoveries: a new interaction of color and face perception, which suggests that color evolved to play an important role in non-verbal social communication; and a universal pattern in color naming that reflects the color statistics of those parts of the world that we especially care about (objects). Together, the work supports the provocative idea that basic color categories are an emergent property arising from the needs we place on the brain (including object recognition and the assignment of object valence), rather than a constraint determined by color encoding.

Hue maps of DKL space at columnar resolution in macaque early visual cortex

Hisashi Tanigawa (Zhejiang University)

How cone signals are transformed into perceptual colors in the cortex is a fundamental question in color vision. Previous studies revealed functional structures for color processing in the early visual cortex, such as CO blobs in V1, CO thin stripes in V2, and color-sensitive domains in V4, and these structures are thought to play an important role in the transformation of cone signals. However, it is not known how hue selectivity based on cone opponency is spatially organized in the early visual cortex. Here we performed optical imaging in macaque V1, V2, and V4 to examine the distribution of domains selective to individual hues of the Derrington-Krauskopf-Lennie (DKL) color space, which is based on the cone-opponent axes of the lateral geniculate nucleus (LGN). We presented visual stimuli with isoluminant color/gray or color/color stripes: the hue of the color stripes was chosen from eight evenly spaced directions in an isoluminant plane of the DKL color space, four of which lay along the L-M and S-(L+M) cardinal axes. In this talk, I will first introduce our past results of optical imaging in V4 and then show recent results of imaging in the early visual cortex using the DKL color space.

Polymorphic nature of color vision in primates

Chihiro Hiramatsu (Kyushu University)

Although mammals generally have dichromatic color vision, primates have uniquely evolved trichromatic vision. However, not all primates possess uniform trichromatic vision, and diverse color vision is ubiquitous in many Neotropical primates and human populations owing to polymorphisms of red-green visual pigment genes. The biological significance of polymorphic color vision and how it influences differences beyond perception are not fully understood. In this talk, I will present how color vision is polymorphic at genetic and perceptual levels, and how these traits affect behavior and even aesthetic impression. Then, I would like to discuss the potential influences of experience and social interaction, which may modify conscious perception of colors.

Symposium 1-4

Modeling approaches to visual circuit function, pathology, and regeneration.

Organizers:

Katsunori Kitano (Ritsumeikan University)

Speakers:

Masao Tachibana (Ritsumeikan University)

Katsunori Kitano (Ritsumeikan University)

Alexander Sher (University of California, Santa Cruz)

This symposium shows how a combination of highly quantitative measurements and mathematical modeling can lead to insights into higher-order visual processing in the retina, retinal rhythmogenesis, and the mechanisms that underlie the re-establishment of retinal connections. In the first talk, Dr. Tachibana will describe a novel mechanism through which eye movements can dramatically change the group signaling properties of the ganglion cell network, altering its informational content. Second, Dr. Kitano will present a mathematical model for an unexpected oscillatory activity in the degenerating retina. The model suggests a source for the abnormal oscillations and may allow us to devise therapeutic interventions. Finally, Dr. Sher will present a quantitative analysis of how connectivity is reestablished in the adult outer retina following the removal of a subset of photoreceptor (rod and cone) targets.

Rapid and coordinated processing of global motion images by local clusters of retinal ganglion cells

Masao Tachibana (Ritsumeikan University)

Our visually perceived world is stable, irrespective of incessant motion of the retinal image due to the movements of eyes, head, and body. Accumulating evidence indicates that the central nervous system may play a key role in stabilizing the visual world. Fixational and saccadic eye movements cause jitter and rapid motion of the whole retinal image, respectively. However, it is not yet evident how the retina processes visual information during eye movements. Furthermore, it is not clear whether multiple subtypes of retinal ganglion cells (GCs) send visual information independently or cooperatively. We performed multi-electrode recordings and whole-cell clamp recordings from GCs of the retina isolated from goldfish. GCs were physiologically classified into six subtypes (Fast/Medium/Slow, transient/sustained) based on the temporal profile of the receptive field (RF) estimated by the reverse correlation method. We found that the jitter motion of a global random-dot background induced elongation and sensitization of the spatiotemporal RF of the Fast-transient GC (Ft GC). The following rapid global motion induced synchronous firing among local Ft GCs and cooperative firing with precise latencies among adjacent specific GC subtypes. Thus, global motion images that simulated fixational and saccadic eye movements were processed in a coordinated manner by local clusters of specific GCs. Stimulus conditions (duration, area, velocity, and direction of motion) that altered the properties of the RF were consistent with the characteristics of in vivo goldfish eye movements. The wide-range lateral interaction, possibly mediated by electrical and GABAergic synapses, contributed to the RF alterations. These results indicate that the RF properties of retinal GCs in a natural environment are substantially different from those under simplified experimental conditions. Processing of global motion images already begins in the retina and may facilitate visual information processing in the brain.

Normal and pathological states generated by dynamical properties of the retinal circuit

Katsunori Kitano (Ritsumeikan University)

The retina contains numerous subtypes of neurons, each of which, when embedded in a circuit, exhibits different dynamical properties. These dynamical properties can influence the output of the retina under normal conditions, and may also play a role in establishing aberrant rhythms under pathological conditions. Indeed, an understanding of the dynamical properties of pathological states may help us to understand dynamic neural mechanisms under more normal conditions. Compared to the normal adult retina, which lacks oscillatory activity, the retina of the rd1 (retinal degeneration 1) mouse is known to exhibit spontaneous, low-frequency (<10 Hz) oscillations. Two potential mechanisms for the spontaneous oscillation have been proposed. One mechanism involves the properties of a gap junction network between cone bipolar cells (BCs) and AII amacrine cells (AII-ACs) and between AII-ACs (Trenholm et al., 2012), whereas in the other, the oscillations arise from the intrinsic properties of AII-ACs (Choi et al., 2014). We studied the mechanism of spontaneous oscillation using a computational model of the AII-AC, BC, and GC network. In particular, to solve the paradoxical phenomenon mentioned above, we incorporated a ribbon synapse model at the BC-GC synapse. Even when bipolar cells are held in the depolarized state, neurotransmitter release is not always enhanced because of short-term depression (Tsodyks and Markram, 1997). If we assume upregulation of the synapses in the inner plexiform layer of the rd1 retina (Dagar et al., 2014), our model can reproduce both the normal and abnormal neural states in the absence of light stimuli: no response in the normal retina and spontaneous rhythmic activity in the abnormal retina.

Restoration of selective connectivity in adult mammalian retina

Alexander Sher (University of California, Santa Cruz)

Specificity of synapses between neurons of different types is essential for the proper function of the central nervous system (CNS). While we have learned much about the formation of these synapses during development, the degree to which the adult CNS can reestablish specific connections following injury or disease remains largely unknown. I will show that specific synaptic connections within the adult mammalian retina can be reestablished after neural injury. We used selective laser photocoagulation to ablate small patches of photoreceptors in vivo in adult rabbits, ground squirrels, and mice. Functional and structural changes in the retina at different time points after the ablation were probed via electrophysiology and immunostaining accompanied by confocal imaging. We found that deafferented rod bipolar cells located within the region where photoreceptors were ablated restructure their dendrites. New dendritic processes extend towards surrounding healthy photoreceptors and establish new functional synapses with them. To test whether specific connectivity can be reestablished, we observed the restructuring of deafferented S-cone bipolar cells, which synapse exclusively with S-cone photoreceptors in the healthy retina. We discovered that deafferented S-cone bipolar cells extend their dendrites in random directions within the outer plexiform layer. If an extended dendrite encounters a healthy S-cone, it forms a synapse with it. At the same time, it passes M-cone photoreceptors without making synapses. Finally, we used transgenic mice to investigate the molecular mechanisms behind the observed restructuring. Our results indicate that the adult mammalian retina retains the ability to make new specific synapses, leading to the reestablishment of correct neural connectivity.

Symposium 2-1

On the border of implicit and explicit processing

Organizers:

Shao-Min (Sean) Hung (James Boswell Postdoctoral Scholar, California Institute of Technology)

Speakers:

Shinsuke Shimojo (California Institute of Technology)

Shao-Min (Sean) Hung (California Institute of Technology)

Daw-An Wu (California Institute of Technology)

Naotsugu Tsuchiya (Monash University)

Implicit processing plays an important role in maintaining visual functions. After all, at any given moment, our phenomenal experience is inherently limited by various factors, including attention and working memory. In this symposium, we will tackle major questions in the field and challenge intuitions on implicit/unconscious processing. These questions include the fundamental relation between attention and consciousness, whether the level of visual processing can delineate explicit from implicit processing, and how implicit decision making perturbs the explicit sense of agency.

Naotsugu Tsuchiya will show recent findings on how attention tracks a suppressed stimulus under binocular rivalry. Shao-Min Hung will provide evidence from unconscious language processing, substantiating high-level implicit processing. Daw-An Wu will further discuss how TMS alters our attribution of motor decision making.

These topics will be integrated by Shinsuke Shimojo, providing an overall view of the current challenges and advances in the field, including “postdiction.” Some of these challenges can be better dealt with once we are equipped with more suitable views on implicit processing, such as a dynamic interaction among visual items across time, utilizing both predictive and postdictive factors.

Shinsuke Shimojo (Gertrude Baltimore Professor of Experimental Psychology, California Institute of Technology)

Can the implicit level of mind execute only simple sensory/cognitive functions? And is the bottleneck to consciousness single, or multi-gated? These questions are elusive, especially considering examples such as implicit semantic priming and the implicit Stroop effect (Hung talk in this symposium). I will aim for a taxonomy and integration of related findings, including my own, to obtain a clearer overview. First, there are multiple definitions of implicit processing on top of “subliminal”, as exemplified in causal misattribution in action (Wu talk), and attention vs. consciousness (Tsuchiya talk). Second, the implicit/explicit distinction will NOT map onto the lower/higher levels of cognitive function (Hung talk). Rather, there are multiple gates to consciousness, as indicated in the binocular rivalry debate (80s, up to now), and also quick interplays between implicit and explicit processes. Third, the implicit process may be dynamic, spreading over time and operating predictively and postdictively. The auditory-visual “rabbit” effect would be a great example where an implicit postdictive process leads to a conscious percept (Shimojo talk). The implicit process is also based on separate dynamic sampling frequencies. Some evidence comes from interpersonal bodily and neural synchrony (Shimojo talk), and from the dependence of perceptual changes upon allocation of attention relying on different temporal frequencies (Tsuchiya talk). Thus, all together, we may need to abandon several simplistic ideas of implicit processes and instead take a more dynamic and interactive view.

Attention periodically samples competing stimuli during binocular rivalry

Naotsugu Tsuchiya (Monash University)

The attentional sampling hypothesis suggests that attention rhythmically enhances sensory processing when attending to a single object (~8 Hz) or to multiple objects (~4 Hz). Here, we investigated whether attention samples sensory representations that are not part of the conscious percept during binocular rivalry. When attention was crossmodally cued toward a conscious image, subsequent changes in consciousness occurred at ~8 Hz, consistent with the rate of undivided attentional sampling. However, when attention was cued toward the suppressed image, changes in consciousness slowed to ~3.5 Hz, indicating the division of attention away from the conscious visual image. In the electroencephalogram, we found that at attentional sampling frequencies, the strength of inter-trial phase coherence over fronto-temporal and parieto-occipital regions correlated with changes in perception. When cues were not task-relevant, these effects disappeared, confirming that perceptual changes were dependent upon the allocation of attention, and that attention can flexibly sample away from a conscious image in a task-dependent manner.

Language processing outside the realm of consciousness

Shao-Min (Sean) Hung (California Institute of Technology; Huntington Medical Research Institutes)

The concept “Out of sight, out of mind” has been repeatedly challenged by findings that show visual information biases behavior even without reaching consciousness. However, the depth and complexity of unconscious processing remain elusive. To tackle this issue, we examined whether high-level linguistic information, including syntax and semantics, can be processed without consciousness.

Using binocular suppression, we showed that after a visible sentential context, a subsequent syntactically incongruent word broke suppression and reached consciousness earlier. Critically, when the sentential context was suppressed while participants made a lexical decision to the subsequent visible word, faster responses to syntactically incongruent words were obtained. Further control experiments showed that (1) the effect could not be explained by simple word-word associations, since the effect disappeared when the subliminal words were flipped, and (2) the effect occurred independently of accurate localization of the subliminal text.

In another study we utilized a “double Stroop” paradigm where a suppressed colored word served as a prime while participants responded to a subsequent visible Stroop word. In the word-naming task, we showed that word but not color inconsistency slowed down the response time to the target, suggesting that semantic retrieval was prioritized. However, when asked to name the color, the same effect was obtained only after a significant practice effect on the color naming (i.e. reduction of response time) occurred, suggesting a competition of attentional resources between the current conscious task and unconscious stimulus. These findings were later replicated in separate experiments.

Across multiple studies we showed that high-level linguistic information can be processed unconsciously and exert an effect. These findings push the limit of unconscious processing and further show that an interplay between conscious and unconscious processing is crucial for such unconscious effects to occur.

Daw-An Wu (California Institute of Technology)

We generally assume that intentions and decisions cause our voluntary acts: we form a conscious intention to do something, and then this mental act leads to a bodily act. Neuroscientific research into the timeline of volition faces the challenge of measuring and reconciling events along many unstably related timelines: external, neural, and mental. We use transcranial magnetic stimulation (TMS) of motor cortex to create a reference event, allowing single-trial temporal order judgements to be meaningful across all the timelines.

1) We use electromyography (EMG) to monitor the participant’s (e.g.) thumb. 2) TMS is targeted to motor cortex so as to elicit an involuntary thumb movement. 3) The participant is asked to relax and, at a time of their own choosing, to flex their thumb (a voluntary movement). When the EMG detects the initiation of this movement, it triggers the TMS.

In many cases, the participants report that the TMS click and its resulting thumb movement happened prior to their own volition. Some describe it as if the machine was reading their mind, and just as they were about to decide to act, the TMS beat them to it. The way we have set up the system, however, the TMS cannot be triggered until the voluntary muscle movement has physically begun.

The initiation of a voluntary act is not a discrete, early event to which we have direct mental access. Instead, it is a process that continues to consolidate after the initiation of movement. Our perception of our intentions depends not only on neural signals generated at initiation onset, but also on the integration of information gathered later. This may be analogous to the role of re-entrant feedback to visual cortex in visual consciousness. Contrary to the Cartesian assumption that our introspective awareness is direct, our sense of agency is inferred based on predictive and postdictive inferences about its most likely cause.

Symposium 2-2

The early development of face and body perception

Organizers:

Jiale Yang (Department of Life Sciences, University of Tokyo)

Yumiko Otsuka (Department of Humanities and Social Sciences, Ehime University)

Speakers:

Naiqi G. Xiao (Princeton University)

Sarina Hui-Lin Chien (China Medical University)

Elena Nava (University of Milan-Bicocca)

Jiale Yang (University of Tokyo)

Masahiro Hirai (Jichi Medical University)

Humans possess remarkable capacities to process face- and body-related signals. Prior studies have consistently reported visual sensitivity to faces and bodies at birth (e.g., Filippetti et al., 2013; Johnson et al., 1991). Moreover, culture-specific experience shapes the development of the visual system to develop expertise for specific types of faces and bodies (e.g., own-race faces and communicative body gestures). Furthermore, it is well known that the development of face and body perception is at the foundation of more complex perceptual and cognitive abilities, such as learning and social skills. In this symposium, we will present 5 talks focusing on the early development of face and body perception from infancy to childhood, using a broad range of research methods: skin conductance, electroencephalogram (EEG), eye-tracking, and psychophysical measurements.

Xiao will show how experience of face-race determines early development of infants’ social perception, social learning, and stereotype formation. Chien will show that pervasive own-race face experience shapes the development of fine-grained and efficient face perception across childhood, which further links to biased social development in childhood. Nava will examine the development of multisensory integration from early infancy to childhood and its contribution to the development of body representation. Yang will show that tactile information facilitates visual processing in infants, and how body representation modulates this multisensory enhancement. Hirai will talk about infants’ perception of body movements and bodily gestures, and its role in social learning.

In sum, this symposium brings together the latest findings regarding face and body perception across various stages of life and in different cultural settings. These studies offer insights into the current advances and future directions of the field of early development of face and body perception.

Naiqi G. Xiao (Princeton University)

Convergent evidence shows that experience shapes early perceptual development. For example, infants who grow up in a mono-racial environment develop biased perceptual capabilities for own- vs. other-race faces. However, the breadth of experiential impacts on early development remains unclear. To this end, we explored how face-race experience affects early social development.

In three lines of research, we investigated the impacts of face-race experience in Asian countries, where people mostly see Asian faces but rarely see faces of other races. Thus, the mono-racial environment in Asian countries provides an ideal setting to examine whether infants’ social development is biased by asymmetrical face-race experience.

We first studied how face-race affects infants’ social perception via their associations of face-races with different emotional signals (happy vs. sad music). With increased age, infants gradually associated own-race faces with happy music, but other-race faces with sad music; these associations were evident at 9 months of age. To probe how this biased social perception further influences infants’ social interactions, we investigated infants’ social learning behaviors via learning to follow others’ gaze. Seven-month-olds tended to learn from own- but not other-race adults under uncertain situations. Moreover, a similar race-based social learning bias was found when infants learned from multiple own- vs. other-race adults: infants formed a stronger stereotype from a group of other-race adults as opposed to a group of own-race adults. Together, this evidence demonstrates the social consequences of asymmetrical face-race experience in infancy. These findings stress the broad experiential impacts on early development beyond perceptual domains.

Sarina Hui-Lin Chien (China Medical University)

People are remarkable at processing faces. In a split second, one can recognize a person’s identity, gender, age, and race. Importantly, such face processing expertise is not equally prominent for all classes of faces; it works best for faces belonging to one’s own racial group. In this talk, I will highlight the development and challenges of becoming a native face expert based on my recent studies with Taiwanese children. First, although many cross-cultural studies have reported an early emergence of the own-race advantage (ORA) in the first year of life, adult-like proficiency in discriminating own-race faces is not fully manifested until late childhood. Second, although encoding of race is fast and automatic, categorizing racially ambiguous faces is biased and cognitively taxing. Adults and children with racial essentialist beliefs tend to categorize ambiguous bi-racial faces as other-race. Third, when do children judge people by their race? We found that a rudimentary race-based social preference emerges in the late preschool years, and the influence of social status becomes increasingly important as children go to elementary school. In sum, the collective findings suggest that our perception of race emerges early in life and continues to develop through childhood. Lastly, the implications for race-based perceptual and social biases and avenues for future research will be discussed.

Multisensory contributions to the development of body representation

Elena Nava (University of Milan-Bicocca)

The representation of the body and the sense of body ownership are the product of complex mechanisms, and adult studies have suggested that a crucial role is played by multisensory interactions of body-related signals, such as vision, touch and proprioception. In my talk I will present a series of studies conducted at different stages of development (from infancy to childhood) that suggest that multisensory cues not only shape body representation but play either a facilitating or constraining role depending on age. In particular, I will show that very early in life, infants are able to extract the amodal invariant that is common across senses (e.g., rhythm, tempo), and this predisposes them to be naturally attracted to redundant multisensory stimuli. Infants can also extract the social component conveyed by multisensory stimuli, as observed in a recent study in which we found that 4-month-old infants show less arousal (as indexed by skin conductance response) to slow/affective touches coupled with a female face than to multisensory non-social stimuli (a discriminative type of touch coupled with seeing houses). Interestingly, I will show that later in development, children fail to integrate the senses, and this prevents them from being susceptible to classical multisensory body illusions, such as the rubber hand illusion. Finally, I will show that sensory experience, such as vision, contributes to the development of multisensory interactions, and that lack of visual input – as in congenital blindness – prevents blind individuals from having a typical body representation.

The effect of tactile-visual interactions on body representation in infants

Jiale Yang (University of Tokyo)

The representation of the body, which is closely related to motor control and self-awareness, relies upon complex multisensory interactions. Human newborns have been observed to perceive their own bodies (Rochat, 2010), and recent studies showed that visual-tactile interactions facilitate body perception in the early months of life (Filippetti et al., 2013; Freier et al., 2016). In the present study, we used steady-state visually evoked potentials (SSVEPs) to investigate the development of tactile-visual cortical interactions underlying body representations in infants. In Experiment 1, twelve 4-month-old and twelve 8-month-old infants watched a video in which a hand was stroked with a metal tube. To elicit the SSVEP, the video flashed at 7.5 Hz. In the tactile-visual condition, the infant’s own hand was also stroked by a tube whilst they watched the video. In the vision-only condition, no tactile stimulus was applied to the infant’s hand. We found larger SSVEPs in the tactile-visual condition than in the vision-only condition in 8-month-old infants, but no difference between the two conditions in the 4-month-olds. In Experiment 2, we presented an inverted video to 8-month-old infants. The enhancement of the SSVEP by tactile stimuli was absent in this case, demonstrating that some degree of body-specific information was required to drive the tactile enhancement of visual cortical processing seen in Experiment 1. Taken together, our results indicate that tactile influences on visual processing of bodily information develop between 4 and 8 months of age.

Development of bodily movement perception in preverbal infants

Masahiro Hirai (Jichi Medical University)

Understanding another’s actions or behavior is one of the vital abilities that allows us to live in a dynamic and socially fluid world. In this talk, two aspects of body perception in preverbal infants will be discussed. The first aspect concerns the developmental mechanisms that underlie the perception of bodily movements—particularly the visual phenomenon of “biological motion” (Johansson, 1973), whereby our visual system detects various human actions through point–light motion displays. The second aspect concerns the cognitive mechanisms of the communicative aspect of bodily movement. The theory of natural pedagogy (Csibra & Gergely, 2009) proposes that infants use ostensive signals such as eye contact, infant-directed speech, and contingent responsivity to learn from others. However, the role of bodily gestures such as hand-waving in social learning has been largely ignored. We explored whether four-month-old infants exhibited a preference for horizontal or vertical (control) hand-waving gestures. We also examined whether horizontal hand-waving gestures followed by pointing gestures facilitated the process of object learning in nine-month-old infants. Results showed that four-month-old infants preferred horizontal hand-waving gestures to vertical hand-waving gestures. Further, horizontal hand-waving gestures enhanced identity encoding for cued objects, whereas vertical gestures did not. Based on our series of studies on body perception in preverbal infants, I will discuss the developmental model of body perception and its role in social communication.

Symposium 2-3

Novel developmental, metabolic, and signaling mechanisms in the retina

Organizers:

Chieko Koike (Ritsumeikan University)

Steven H. DeVries (Northwestern University)

Speakers:

Wei Li (NIH)

Seth Blackshaw (Johns Hopkins University School of Medicine)

Steven H. DeVries (Northwestern University)

The retina is a laminarly organized, self-contained, and accessible piece of the central nervous system that performs the task of early visual processing. The ability to isolate a piece of the nervous system that remains fully functional allows us to examine intact pathways, including those that underlie development, metabolism, and neural circuits. This symposium will present recent progress in our understanding of mammalian retinal function and development in the areas of cell fate determination, regeneration, synaptic function, and hibernation by vision researchers in the United States. In this symposium, Dr Seth Blackshaw will exploit both transcriptomics and cross-species comparisons to identify the pathways that are essential for retinal cell fate determination and regeneration capacity. Dr Wei Li will focus on the thirteen-lined ground squirrel and describe metabolic pathway adaptations that permit the retina to tolerate long periods of time at near-freezing temperatures during hibernation. Finally, Dr Steven DeVries will focus on the cone circuitry in the cone-dominant retina of the ground squirrel and describe how parallel processing pathways get their start.

Seeing in the cold: vision and hibernation

Wei Li (National Institutes of Health)

The ground squirrel has a cone-dominant retina and hibernates during the winter. We exploit these two unique features to study retinal biology and adaptations during hibernation. In this talk, I will discuss an optic feature of the ground squirrel retina, as well as several forms of adaptation during hibernation in the retina and beyond. By exploring the mechanisms of adaptation in this hibernating species, we hope to shed light on therapeutic tactics for retinal injury and diseases, which are often associated with metabolic stress.

Building and rebuilding the retina: one cell at a time

Seth Blackshaw (Johns Hopkins University School of Medicine)

The retina is an accessible system for identifying the molecular mechanisms that control CNS cell fate specification and is a prime target for regenerative therapies aimed at restoring photoreceptors lost to blinding diseases. I will discuss our recent large-scale single-cell RNA-Seq analysis of multiple vertebrate species that is aimed at identifying gene regulatory networks that drive the acquisition of neuronal and glial identity in the developing retina. I will discuss our identification of transcription factors that control both temporal identity and proliferative quiescence, new tools we and our collaborators have developed to identify core evolutionarily-conserved gene regulatory networks controlling retinal development, and mechanisms controlling injury-induced neurogenic competence in retinal glia.

Parallel signal processing at the mammalian cone photoreceptor synapse

Steven H. DeVries (Northwestern University)

The brain has a massively parallel architecture that supports its prodigious computational abilities. In the visual system, parallel neural processing begins at the cone photoreceptor synapse. At this synapse, an individual cone signals to ~12 anatomically distinct bipolar cell types that comprise two main classes, On and Off, each consisting of about 6 types. To better understand the first steps in parallel visual signaling, we record in voltage clamp from synaptically connected pairs of cones and identified Off bipolar cells in slices from the cone-dominant ground squirrel retina. At the same time, we capture the detailed structure of the recorded synapse using super-resolution microscopy. Our results show how the molecular architecture of the synapse, including the placement of ribbon transmitter release sites, glutamate transporters, and postsynaptic ionotropic glutamate receptors, can enable the flow of different signals to the different bipolar cell types.

Symposium 2-4

Studying attention without relying on behavior

Organizers:

Yaffa Yeshurun (University of Haifa)

Satoshi Shioiri (Tohoku University)

Speakers:

Yaffa Yeshurun (University of Haifa)

Satoshi Shioiri (Tohoku University)

Hsin-I Liao (NTT Communication Science Laboratories)

Hirohiko Kaneko (Tokyo Institute of Technology)

Attention – the selective processing of relevant information at the expense of irrelevant information – has been subject to scientific inquiry for over a century. One fundamental challenge to the study of attention is that most of our current knowledge was established using paradigms that depend on assumptions regarding the fate of unattended information, or rely in some other way on properties of the participants’ responses (e.g., accuracy or response time). Yet, the assumptions on which these paradigms are based may not always hold, and in general participants’ responses can be influenced by many factors other than attention allocation, including response history, biases, higher-level strategies, experience, and so on. Moreover, response time, which is likely the most prevalent measurement in attention studies, is also linked to motor preparation, not just perception. Fortunately, several recent studies have set out to study attention with novel and exciting methodologies that do not rely on the participant’s response, and therefore provide a more objective measure of attentional deployment. Some of these novel methodologies rely on measurements of brain activity (e.g., SSVEP, ERP) instead of accuracy or response time, while other methodologies rely on pupil size or eye movements. The four presentations included in this symposium illustrate how such methodologies can be utilized to overcome obstacles that prevail with more traditional paradigms.

The characteristics of the attentional window when measured with the pupillary response to light

Yaffa Yeshurun (University of Haifa)

This study explored the spatial distribution of attention with a measurement that is independent of performance, the pupillary light response (PLR), thereby avoiding various obstacles and biases involved in more traditional measurements of spatial covert attention. Previous studies demonstrated that when covert attention is deployed to a bright area the pupil contracts relative to when attention is deployed to a dark area, even though display luminance levels are identical and central fixation is maintained. We used these attentional modulations of the PLR to assess the spread of attention. Specifically, we examined the minimal size of the attentional window and how it varies as a function of target eccentricity and the nature of other non-target stimuli (i.e., distractors). We found that when the target was surrounded by neutral task-irrelevant disks (i.e., bright/dark disks that did not include response-competing information) the attentional window had a diameter of about 2º. However, when the disks included competing information this size could be further reduced. Interestingly, the size of the attentional window does not seem to vary as a function of eccentricity, but it is affected by stimulus size. Finally, we also examined whether the spatial spread of attention is influenced by perceptual load. Load levels were manipulated by the degree of stimulus heterogeneity or task complexity. We found that the size of the attentional window was larger when load levels were low than when load levels were high. These findings demonstrate the flexibility and constraints of spatial covert attention.

Differences in attention modulations measured by steady-state visual-evoked potentials and by behaviors

Satoshi Shioiri (Research Institute of Electrical Communication, Tohoku University)

One well-established method for investigating spatial attention in the human visual system is to ask subjects to attend to a location intentionally (endogenous attention). Attentional modulation has been observed with subjective methods such as reaction time and detection rate, and with objective methods such as electroencephalogram (EEG) and fMRI. We have been comparing several aspects of spatial attention between behavioral measures and EEG measures, focusing on the steady-state visual evoked potential (SSVEP). With stimuli tagged by temporal frequency, the SSVEP allows attentional effects to be measured at unattended locations. We have succeeded in measuring spatial and temporal characteristics of visual attention using SSVEP. The measurements showed both similarities and differences between the behavioral and EEG measurements, and also among different EEG measures. The EEG measures we compared were the amplitude and phase of the SSVEP as well as the event-related potential (ERP). The time course of spatial attention shifts estimated by detection rate is more similar to that estimated by SSVEP phase than to the others, whereas the spatial spread of attention is similar to that of the P3 of the ERP but very different from that of either SSVEP amplitude or SSVEP phase. Measurements of object-based attention showed similar object effects for SSVEP and reaction time. These results suggest that attention modulation occurs not at a single site of visual processing, but perhaps at multiple processes. Different effects of attention at different processes may be related to the different roles of attention at those processes.

Unified Audio-Visual Spatial Attention Revealed by Pupillary Light Response

Hsin-I Liao (NTT Communication Science Laboratories)

Recent evidence shows that the pupillary light response (PLR) reflects not only the physical light input to the retina, but also the mind’s eye, i.e., where covert visual attention is directed (see review in Mathôt & Van der Stigchel, 2015). While the visual and auditory systems rely on different peripheral mechanisms to represent the locations of distal stimuli in the environment, it remains unclear how the spatial representations of visual and auditory objects are formed in the brain, and how attention plays a role there. Do auditory and visual spatial attention share a common mechanism or not? To investigate this issue, we examined whether the PLR also reflects spatial attention to auditory objects. In a series of studies, participants paid attention to an auditory object, which was defined by a spatial (e.g., sounds presented in the left or right ear) or non-spatial (e.g., voices from a male or female talker) cue. Results showed that the PLR reflected the focus of spatial attention regardless of whether the auditory object was defined by the spatial or non-spatial cue. Furthermore, the magnitude of the PLR induced by spatial attention was modulated by the reliability of the spatial information of the auditory object. Cognitive effort (e.g., task difficulty) or physical gaze position could not explain the result. Taken together, the overall results indicate that the PLR reveals not only the focus of covert visual attention but also that of auditory attention. Auditory objects share the common space representation associated with visual spatial attention.

Estimation of attentional location based on the measurement of unconscious eye movements

Hirohiko Kaneko (Tokyo Institute of Technology), Kei Kanari (Tamagawa University)

Gaze location and attentional location are closely related, but they are not always the same. Although many eye tracking systems have been developed and used to roughly estimate the location of attention in a scene, an attention tracking system that accurately estimates the location of attention has not yet been developed. In our series of studies, we found that the relationships between the characteristics of eye movements and stimulus properties can be used to estimate attentional location. One example is the relationship between optokinetic nystagmus (OKN) and motion in the attended area. We presented two areas with different directions of motion, arranged as left and right, top and bottom, or center and surround (concentric) in the display. Observers kept their attention on one of the areas by performing an attention task, which was to count targets appearing in that area. The results indicated that attention enhanced the gain and frequency of the OKN corresponding to the attended motion. Another example is the small vergence eye movements that occur when paying attention to an approaching or receding object while fixating a stationary object. The magnitude of the eye movements when paying attention to a certain area was smaller than when directing the eyes to that area, but the relationships between the characteristics of the eye movements and the stimulus were the same in both cases. Using these relationships, it is possible to determine the attentional location in a visual scene containing objects with various depths and motions. We also mention some applications of the present method for estimating attentional location based on the measurement of unconscious eye movements.

Symposium 3-1

Neural oscillations and behavioral oscillations

Organizers:

Kaoru Amano (Center for Information and Neural Networks (CiNet), National Institute of Information and Communications Technology (NICT))

Rufin VanRullen (Centre de Recherche Cerveau et Cognition (CerCo), CNRS)

Speakers:

Kaoru Amano (CiNet, NICT)

Ryohei Nakayama (The University of Sydney, CiNet, NICT)

Nai Ding (Zhejiang University)

Huan Luo (Peking University)

Rufin VanRullen (Centre de Recherche Cerveau et Cognition (CerCo), CNRS)

Neural oscillations, such as delta (0.5–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), and gamma (30–100 Hz), are widespread across cortical areas and are related to feature binding, neuronal communication, and memory. Accumulating evidence suggests that alpha oscillations correlate with various aspects of visual processing. Typically, the amplitude of intrinsic alpha oscillations is predictive of performance on visual or memory tasks, while the frequency of intrinsic occipital alpha oscillations is reflected in the temporal properties of visual perception. Other lines of evidence suggest that behavioral performance, such as detection thresholds, oscillates at theta or alpha frequencies. While the connection between neural oscillations and behavior seems to be tight, the mechanisms underlying these phenomena are not fully understood.

In this symposium, five researchers will present their recent studies on neuronal and/or behavioral oscillations and will discuss the possible functional roles of these oscillations. Dr. Amano will show the causal relationship between intrinsic alpha oscillations and a visual illusion called the motion-induced spatial conflict, possibly suggesting cyclic processing at the frequency of alpha oscillations. Dr. Nakayama will report discretized motion perception at 4–8 Hz, which may reflect a slow attentional process. Dr. Ding will discuss the relation of temporal attention to sensorimotor processes. Dr. Luo will present evidence suggesting a causal role of temporally ordered reactivations in mediating sequence memory. Finally, Dr. VanRullen will present perceptual echoes originating from alpha oscillations and their relation to predictive coding.

Kaoru Amano (Center for Information and Neural Networks (CiNet), National Institute of Information and Communications Technology (NICT))

Although accumulating evidence suggests that alpha oscillations correlate with various aspects of visual processing, the number of studies proving their causal contribution to visual perception is limited. Here we report that illusory visual vibrations are consciously experienced at the frequency of intrinsic alpha oscillations. We employed an illusory jitter perception, termed the motion-induced spatial conflict, that originates from the cyclic interaction between motion and shape processing. A comparison between the perceived frequency of the illusory jitter and the peak alpha frequency (PAF) measured using magnetoencephalography (MEG) revealed that inter- and intra-participant variations in the PAF are mirrored by the illusory jitter perception. More crucially, psychophysical and MEG measurements during amplitude-modulated current stimulation showed that the PAF can be artificially manipulated, which results in a corresponding change in the perceived jitter frequency. These results suggest a causal contribution of neural oscillations at the alpha frequency to the temporal characteristics of visual perception. Our results also suggest that cortical areas (here, dorsal and ventral visual areas) interact at the frequency of alpha oscillations. A possible neuroanatomical basis of the inter-individual differences in the PAF and the peak alpha power (PAP) will also be discussed.
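For readers unfamiliar with the PAF, the sketch below shows one simple way such a quantity can be estimated: take the power spectrum of a recording and find the frequency with maximum power inside the alpha band. This is an illustrative assumption, not the MEG pipeline used in the study; the function name `peak_alpha_frequency`, the 8–13 Hz band limits, and the synthetic 10.4 Hz signal are hypothetical choices.

```python
import numpy as np

def peak_alpha_frequency(signal, fs, band=(8.0, 13.0)):
    """Return the frequency (Hz) of maximum spectral power within `band`."""
    n = len(signal)
    window = np.hanning(n)                        # taper to reduce leakage
    power = np.abs(np.fft.rfft(signal * window)) ** 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[in_band][np.argmax(power[in_band])]

# Synthetic 10 s recording: a 10.4 Hz rhythm buried in white noise.
fs = 250.0
n = 2500
t = np.arange(n) / fs
rng = np.random.default_rng(0)
recording = np.sin(2 * np.pi * 10.4 * t) + 0.5 * rng.standard_normal(n)

paf = peak_alpha_frequency(recording, fs)
```

With a 10 s recording the frequency resolution is 0.1 Hz, which gives a sense of how long a recording must be to resolve small inter- or intra-participant differences in the PAF.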

Temporal continuity of vision and periodic feature binding

Ryohei Nakayama (The University of Sydney; Center for Information and Neural Networks (CiNet), National Institute of Information and Communications Technology (NICT))

Psychophysical and physiological evidence reveals that sensory information is processed periodically despite the subjective continuity of perception over time. How does the visual system accomplish the subjectively smooth transitions across perceptual moments? To address this issue, we analyzed a novel illusion: a continuously moving Gabor pattern appears temporally discrete when its spatial window moves over a carrier grating that remains stationary or drifts in the opposite direction. This discretization depends on the speed difference between the window and the grating, but the apparent rhythm is constant at 4–8 Hz regardless of the stimulus speeds (Nakayama, Motoyoshi & Sato, 2018). In light of recent studies reporting the theta-rhythmic function of attention, we hypothesized that the different dimensional features of the window and the grating, whose positional estimates are biased by motion in opposite directions, would be bound in a periodic manner. Accordingly, we found that temporal binding of visual features is performed periodically at ~8 Hz between spatially separated locations, and that it depends on pre-stimulus neural oscillatory phases locked by voluntary action (Nakayama & Motoyoshi, under review). (As one would expect from previous studies on pre-attentive binding, such periodicity was not observed between superimposed locations.) Therefore, periodic attention serves to bind sensory information across different dimensions and locations to produce unified perception, subserved by neural oscillations in synchrony with action. The combined results imply that a slow attentional process can cause the discretized perception, while a fast automatic process may underlie the temporal continuity of vision.

Temporal attention requires sensorimotor mechanisms during visual and auditory processing

Nai Ding (Zhejiang University)

We live in a dynamic world in which sensory information arrives rapidly and overwhelmingly. Temporal attention provides a mechanism to preferentially process the time moments that carry more critical sensory information. It has been proposed that the motor system is critical for implementing temporal attention, and here I will present recent evidence that temporal attention involves sensorimotor processes. It is shown that blinks and related eyelid activity are synchronized to temporal attention. When participants process a visual sequence, a task is used to force them to attend to specific time moments. We find that blinks are suppressed at the attended time moment and that the blink rate rebounds about 700 ms after the attended moment. This phenomenon can be interpreted as a form of active sensing that avoids the loss of important visual information caused by blinks. Nevertheless, further evidence from the auditory modality shows that attention-related eyelid activity is a more general intrinsic property of the brain. It is shown that blinks are similarly modulated by temporal attention in auditory tasks. Even when the eyes are closed, eyelid activity measured by EOG is still suppressed at the attended time moment. Furthermore, when participants listen to speech and perform a speech comprehension task that does not explicitly require temporal attention, eyelid activity is synchronized to spoken sentences. Taken together, these results suggest that the motor cortex is activated when attention is allocated in time and that activity in the motor cortex can be reflected in eyelid activity.

Serial, compressed memory-related reactivation in human sequence memory: neural and causal evidence

Huan Luo (Peking University)

Storing temporal sequences of events in short-term memory (i.e., sequence memory) is fundamental to many cognitive functions. However, it is unknown how sequence order information is maintained and represented in human subjects. I will present two studies from the lab that address this question. First, using electroencephalography (EEG) recordings in combination with a temporal response function (TRF) approach, we probed item-specific reactivation during the delay period when subjects held a sequence of items in working memory. We demonstrate that serially remembered items are successively reactivated in a backward and time-compressed manner. Moreover, this fast-backward replay is strongly associated with the recency effect, a typical behavioral index in sequence memory, thus supporting an essential link between item-by-item replay and behavior. Based on these neural findings, we further developed a “behavioral temporal interference” approach to manipulate the item-specific reactivations during retention, aiming to disrupt and change subsequent sequence memory behavior at recall. Our results show that temporal manipulation of the replay patterns (synchronization and order reversal) successfully alters the serial position effect, as typically revealed in sequence memory behavior. Taken together, the results constitute converging evidence supporting the causal role of temporally ordered reactivations in mediating sequence memory. We also provide a promising, efficient approach to rapidly manipulate the temporal structure of multiple items held in working memory.
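The abstract does not specify how the TRF was computed. As a generic illustration of the idea, the sketch below estimates a temporal response function as the set of time-lagged weights that best map a stimulus time course onto a recorded response, using ridge regression. The function name `estimate_trf`, the ridge parameter, and the synthetic kernel are assumptions for illustration only.

```python
import numpy as np

def estimate_trf(stimulus, response, n_lags, ridge=1e-3):
    """Estimate lagged weights w so that response[t] ~ sum_k w[k] * stimulus[t - k]."""
    n = len(stimulus)
    design = np.zeros((n, n_lags))
    for lag in range(n_lags):
        # Column `lag` holds the stimulus delayed by `lag` samples.
        design[lag:, lag] = stimulus[: n - lag]
    gram = design.T @ design + ridge * np.eye(n_lags)
    return np.linalg.solve(gram, design.T @ response)

# Synthetic check: a random stimulus filtered by a known (hypothetical) kernel.
rng = np.random.default_rng(1)
stimulus = rng.standard_normal(5000)
true_kernel = np.array([0.0, 0.5, 1.0, 0.5, -0.3, 0.0])
response = np.convolve(stimulus, true_kernel)[: len(stimulus)]

trf = estimate_trf(stimulus, response, n_lags=len(true_kernel))
```

In a setting like the one described, a separate TRF would be estimated for each remembered item, so that the time course of each item's response can be compared across items.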

Alpha oscillations, travelling waves and predictive coding

Rufin VanRullen (Centre de Recherche Cerveau et Cognition (CerCo), CNRS)

Alpha oscillations are not strictly spontaneous, like an idling rhythm, but can also respond to visual stimulation, giving rise to perceptual "echoes" of the stimulation sequence. These echoes propagate across the visual and cortical space with specific and robust phase relations. In other words, the alpha perceptual cycles are actually travelling waves. The direction of these waves depends on the state of the system: feed-forward during visual processing, top-down in the absence of inputs. I will tentatively relate these alpha-band echoes and waves to back-and-forth communication signals within a predictive coding system.

Symposium 3-2

Science of facial attractiveness

Organizer:

Tomohiro Ishizu (University College London)

Speakers:

Zaira Cattaneo (University of Milano-Bicocca)

Tomoyuki Naito (Osaka University)

Chihiro Saegusa (Kao Corporation)

Koyo Nakamura (Waseda University)

Tomohiro Ishizu (University College London)

Visual attraction pervades our daily lives. It influences and guides our moods, behaviours, and decisions. Scientists apply psychological and cognitive neuroscientific methods to disentangle the seemingly complex process of attractiveness evaluation, and the body of rigorous scientific findings is growing quickly. Facial attractiveness has been a central interest in the science of attraction. In this symposium, we present new insights into attractiveness judgments, with a focus on face perception, from a wide range of methods including behavioural testing, computational modelling, neuroimaging, and brain stimulation. We anticipate that it will engage the interest of APCV attendees and draw a large and lively audience.